In today’s digital world, the question is no longer if minors use social media but how they use it.

Social media platforms don’t just host young users; they shape their experiences through algorithmic feeds and “addictive” design features that keep kids scrolling long after bedtime. As the mental health toll becomes increasingly clear, lawmakers are stepping in to limit how much control these platforms have over young minds.

What is an “addictive” feed and why target it?

Algorithms don’t just show content; they promote it. By tracking what users click, watch, or like, these feeds are designed to hold users’ attention. For minors, that means endless scrolling and constant engagement, typically at the expense of sleep, focus, and self-esteem.

Under New York’s Stop Addictive Feeds Exploitation (SAFE) for Kids Act, lawmakers found that:

“social media companies have created feeds designed to keep minors scrolling for dangerously long periods of time.”

The Act defines an “addictive feed” as one that recommends or prioritizes content based on data linked to the user or their device.

The harms aren’t hypothetical. Studies link heavy social media use among teens with higher rates of depression, anxiety, and sleep disruption. Platforms often push notifications late at night or during school hours, times when young users are most vulnerable.

Features like autoplay, the “For You” page, “you may also like” suggestions, and quick likes or comments can trap kids in an endless scroll. What begins as harmless entertainment soon becomes a routine they struggle to escape.

Key Developments in Legislation

It’s no surprise that minors’ exposure to social media algorithms sits at the center of today’s policy debates. Over the past two years, state and federal lawmakers have introduced laws seeking to rein in the “addictive” design features of online platforms. While many of these measures face ongoing rulemaking or constitutional challenges, together they signal a national shift toward stronger regulation of social media’s impact on youth.

Let’s take a closer look at some of the major legal developments shaping this issue.

New York’s SAFE for Kids Act

New York’s Stop Addictive Feeds Exploitation (SAFE) for Kids Act represents one of the nation’s most ambitious efforts to regulate algorithmic feeds. The law prohibits platforms from providing “addictive feeds” to users under 18 unless the platform obtains verifiable parental consent or reasonably determines that the user is not a minor. It also bans push notifications and advertisements tied to those feeds between 12 a.m. and 6 a.m. unless parents explicitly consent. The rulemaking process remains ongoing, and enforcement will likely begin once these standards are finalized.

The Kids Off Social Media Act (KOSMA)

At the federal level, the Kids Off Social Media Act (KOSMA) seeks to create national baselines for youth protections online. Reintroduced in Congress, the bill would:

- Ban social media accounts for children under 13.

- Prohibit algorithmic recommendation systems for users under 17.

- Restrict social media access in schools during instructional hours.

Supporters argue the bill is necessary to counteract the addictive nature of social media design. Critics, including digital rights advocates, question whether such sweeping restrictions could survive First Amendment scrutiny or prove enforceable at scale.

KOSMA remains pending in Congress but continues to shape the national conversation about youth and online safety.

California’s SB 976

California’s Protecting Our Kids from Social Media Addiction Act (SB 976) reflects a growing trend of regulating design features rather than content. The law requires platforms to:

- Obtain parental consent before delivering addictive feeds to minors.

- Mute notifications for minors between midnight and 6 a.m. and during school hours unless parents opt in.

The statute is currently under legal challenge for potential First Amendment violations. However, the Ninth Circuit allowed enforcement of key provisions to proceed, suggesting that narrowly tailored design regulations aimed at protecting minors may survive early constitutional scrutiny.

Other State Efforts

Other states are following suit. According to the National Conference of State Legislatures (NCSL), at least 13 states have passed or proposed laws requiring age verification, parental consent, or restrictions on algorithmic recommendations for minors. Mississippi’s HB 1126, for example, requires both age verification and parental consent, and the U.S. Supreme Court allowed the law to remain in effect while litigation continues.

Final Thoughts

We are at a pivotal moment. The era when children’s digital consumption went largely unregulated is coming to an end. The question now isn’t if regulation is on the horizon; it’s how it will take shape and whether it can strike the right balance between safety, free expression, and innovation.

As lawmakers, parents, and platforms navigate this evolving landscape, one challenge remains constant: ensuring that efforts to protect minors from harmful algorithmic design do not come at the expense of their ability to connect, learn, and express themselves online.

What do you think is the right balance between protecting minors from harmful algorithmic exposure and preserving their access to social media as a space for connection and expression?

Omegle is a free video-chatting social media platform. Its primary function has become meeting new people and arranging “

Omegle is a free video-chatting social media platform. Its primary function has become meeting new people and arranging “

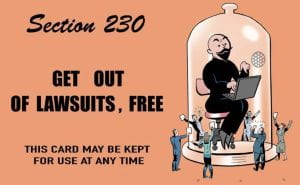

found that Section 230 immunity did not apply to Omegle in a

found that Section 230 immunity did not apply to Omegle in a