TAKING A STANCE ON DATA PRIVACY LAW

The digital age has brought unprecedented complexity to the handling of personal data, and with it a need for comprehensive data legislation. Recognizing this gap in legislative protection, New York has introduced the New York Privacy Act (NYPA) and the Stop Addictive Feeds Exploitation (SAFE) For Kids Act, two comprehensive initiatives designed to better document and safeguard personal data on the consumer side of data collection transactions. New York is taking a stand to protect consumers and children from the harms of data harvesting.

Currently under consideration in the Standing Committee on Consumer Affairs and Protection, chaired by Assemblywoman Nily Rozic, the New York Privacy Act was introduced as “An Act to amend the general business law, in relation to the management and oversight of personal data.” The NYPA was sponsored by State Senator Kevin Thomas and closely resembles the California Consumer Privacy Act (CCPA), which was finalized in 2019. By passing the NYPA, New York would become just the 12th state to adopt a comprehensive data privacy law protecting state residents.

DOING IT FOR THE DOLLAR

Companies buy and sell the sensitive personal data of millions of users in pursuit of higher profits. By purchasing personal user data from social media sites, web browsers, and other applications, advertising companies can predict and drive trends that will increase product sales among different target groups.

Social media companies are notorious for selling user data to data collection companies, including your name, phone number, payment information, email address, stored videos and photos, photo and file metadata, IP address, networks and connections, messages, videos watched, advertisement interactions, and sensor data, as well as the time, frequency, and duration of your activity on the site. The NYPA targets businesses like these by regulating legal persons that conduct business in New York State or that produce products and services aimed at New York residents. To fall under the Act, an entity must:

- (a) have annual gross revenue of twenty-five million dollars or more;

- (b) control or process personal data of fifty thousand consumers or more; or

- (c) derive over fifty percent of gross revenue from the sale of personal data.
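For readers who think in code, the thresholds above can be expressed as a simple eligibility check. This is a minimal sketch, assuming (as the "or" in the list suggests) that meeting any one threshold is enough, combined with the New York connection described above. The `Entity` class, its field names, and `covered_by_nypa` are hypothetical illustrations, not the statutory text.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    # Hypothetical business profile; field names are illustrative only.
    does_business_in_ny: bool                # conducts business in New York State
    targets_ny_residents: bool               # products/services aimed at NY residents
    annual_gross_revenue_usd: float
    consumers_with_data_processed: int
    share_of_revenue_from_data_sales: float  # fraction between 0.0 and 1.0


def covered_by_nypa(e: Entity) -> bool:
    """Sketch of the applicability test as summarized in this article.

    Assumption: any ONE of thresholds (a), (b), or (c) suffices,
    provided the entity has a New York connection.
    """
    has_ny_connection = e.does_business_in_ny or e.targets_ny_residents
    meets_threshold = (
        e.annual_gross_revenue_usd >= 25_000_000            # (a) $25M or more
        or e.consumers_with_data_processed >= 50_000         # (b) 50,000 consumers or more
        or e.share_of_revenue_from_data_sales > 0.50         # (c) over 50% from data sales
    )
    return has_ny_connection and meets_threshold


# An out-of-state app aimed at New Yorkers, small revenue, many users.
small_app = Entity(
    does_business_in_ny=False,
    targets_ny_residents=True,
    annual_gross_revenue_usd=2_000_000,
    consumers_with_data_processed=60_000,
    share_of_revenue_from_data_sales=0.10,
)
print(covered_by_nypa(small_app))
```

Under these assumptions, the out-of-state app above is covered through threshold (b) even though its revenue is far below twenty-five million dollars, which reflects the article's point that the Act reaches beyond businesses physically located in New York.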

The NYPA does more for New York residents because it puts the consumer first. Rather than regulating only businesses operating within New York, the Act also reaches businesses that aim their products and services at New Yorkers, so it protects every resident of New York State who may be subject to targeted data collection. This is an immense step forward in giving consumers control over their digital footprint.

MORE RIGHTS, LESS FRIGHT

The NYPA works by granting all New Yorkers additional rights regarding how their data is maintained by the controllers to which the Act applies. These comprehensive rights include the rights to notice, to opt out, to consent, to data portability, and to correct and delete personal information. The right to notice requires each controller to provide a conspicuous and readily available notice statement that describes the consumer’s rights and indicates the categories of personal data the controller will collect, where that data is collected from, and what it may be used for. The right to opt out allows consumers to opt out of the processing of their personal data for targeted advertising, for sale, and for profiling. Together, these rights give consumers an advantage when browsing sites and using apps: they will be informed of exactly what information they are giving up online and can refuse certain uses of it.

While all the rights included in the NYPA are groundbreaking for the New York consumer, the importance of the right to consent to sensitive data collection and the right to delete data cannot be overstated. The right to consent requires controllers to conspicuously ask for express consent before collecting sensitive personal data. It also contains a zero-discrimination clause to protect consumers who do not give controllers express consent to use their personal data. The right to delete requires controllers to delete any or all of a consumer’s personal data upon request, and to do so within 45 days of receiving the request. These two provisions alone could do more for New Yorkers’ digital privacy than any previous measure, giving consumers control over who may access and keep their sensitive personal data.
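The 45-day deletion window comes down to simple date arithmetic. The sketch below assumes the window is counted in calendar days starting from the date the request is received; the bill's actual counting rules, and any extensions it may allow, could differ. The constant and function names are hypothetical.

```python
from datetime import date, timedelta

# Deletion window described in this article (assumed to be calendar days).
DELETION_WINDOW_DAYS = 45


def deletion_deadline(request_received: date) -> date:
    """Latest date by which a deletion request should be completed,
    assuming 45 calendar days counted from the date of receipt."""
    return request_received + timedelta(days=DELETION_WINDOW_DAYS)


def is_overdue(request_received: date, today: date) -> bool:
    """True once the assumed deadline has passed without completion."""
    return today > deletion_deadline(request_received)


received = date(2024, 3, 1)
print(deletion_deadline(received))
```

Under this assumption, a request received on March 1, 2024 would have to be completed by April 15, 2024, and the controller would be overdue starting April 16.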

BUILDING A SAFER FUTURE

Building on the momentum of the NYPA, New York announced its comprehensive plan to better protect children from the harms of social media algorithms, which are some of the main drivers of personal data collection. Governor Kathy Hochul, State Senator Andrew Gounardes, and Assemblywoman Nily Rozic recently proposed the Stop Addictive Feeds Exploitation (SAFE) For Kids Act, which directly targets social media sites and their algorithms. It has long been suspected that social media use contributes to worsening mental health conditions in the United States, especially among youths. The SAFE For Kids Act seeks to require parental consent before children can access social media feeds that use algorithms to boost usage.

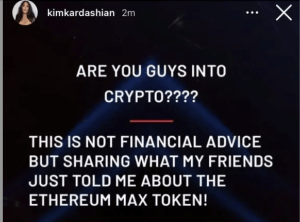

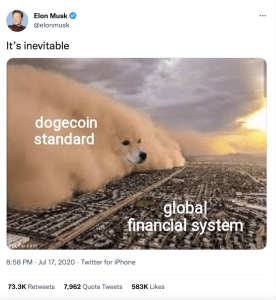

On top of selling user data, social media sites like Facebook, YouTube, and X/Twitter also use carefully constructed algorithms to push content that the user has expressed interest in, usually based on the profiles they click on or the posts they ‘like’. Social media sites feed user data to algorithms they’ve designed to promote content that will keep the user engaged for longer, which exposes the user to more advertisements and produces more revenue.

Children, however, are particularly susceptible to these algorithms and, depending on the posts they view, can be exposed to harmful images or content with serious consequences for their mental health. Social media algorithms can show children content they are not meant to see, without regard for their naiveté and blind trust, traits that are poorly suited to internet use. Distressing posts or controversial images could be plastered across children’s feeds if the algorithm determines that placing them there would drive engagement. Under the SAFE For Kids Act, unless a parent consents, children on social media sites would see their feeds in chronological order, showing only posts from users they ‘follow’ on the platform. This change would completely alter the way platforms treat accounts associated with children, ensuring that children are not shown content they have not sought out themselves. This legislation would build upon the foundations established by the NYPA, opening the door to further regulations that could increase protections for the average consumer and, more importantly, for the average child online.
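The difference between an engagement-driven feed and the feed the SAFE For Kids Act would require can be sketched in a few lines. This is a simplified illustration, not how any platform actually ranks content: the hypothetical `predicted_engagement` score stands in for a platform's proprietary algorithm, and "chronological order" is assumed to mean newest first.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Post:
    author: str
    posted_at: datetime
    predicted_engagement: float  # hypothetical model score; higher = "stickier"


def engagement_feed(posts: list[Post]) -> list[Post]:
    # Algorithmic-style feed: all candidate posts, highest predicted engagement first.
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def chronological_following_feed(posts: list[Post], following: set[str]) -> list[Post]:
    # Feed as described for children without parental consent:
    # only accounts the child follows, newest first, engagement score ignored.
    followed = [p for p in posts if p.author in following]
    return sorted(followed, key=lambda p: p.posted_at, reverse=True)


def build_feed(posts: list[Post], following: set[str],
               is_minor: bool, parental_consent: bool) -> list[Post]:
    # Hypothetical gate: minors without parental consent get the restricted feed.
    if is_minor and not parental_consent:
        return chronological_following_feed(posts, following)
    return engagement_feed(posts)


posts = [
    Post("friend", datetime(2024, 1, 1, 9), 0.2),
    Post("stranger", datetime(2024, 1, 1, 10), 0.9),
    Post("friend", datetime(2024, 1, 1, 11), 0.1),
]
child_feed = build_feed(posts, {"friend"}, is_minor=True, parental_consent=False)
print([p.author for p in child_feed])
```

In this toy example, the stranger's high-engagement post tops the algorithmic feed but never appears in the child's restricted feed, which contains only the followed account's posts in time order.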

New Yorkers: If you have ever spent time on the internet, your personal data is out there, but now you have the power to protect it.

The sweeping transformation of social media platforms over the past several years has given rise to convenient and cost-effective advertising. Advertisers are now able to market their products or services to consumers (i.e. users) at

should  drink, Muse in a

P.C. monitors in our day-to-day lives created a new opportunity for video game developers. Slowly,

id Software, is a series that includes various FPS video games. Among the first, the Doom series introduced

harass one another too. Today, essentially all video games include some sort of communication tool. Users of most video games can chat, talk, or communicate with symbols or gestures with other users while playing. Video games, however, are still socially considered games. In stores, they are found in the video-game aisle of

Amendment speech that has permitted, and continues to permit, societal issues of verbal racial discrimination and harassment to grow.

by game developers and software creators. Rather, the problem of violent and toxic communication and its impact on society is left in the hands of gamers themselves. It’s now up to @B100DpR1NC3$$ to bring justice for @PIgSl@y3r’s potty mouth by remembering to submit a complaint after slaying the dragon, without ever knowing, or remembering to check, whether the user was banned.

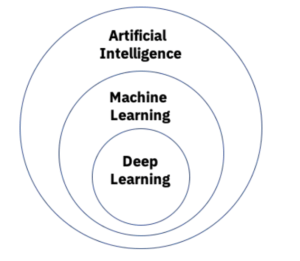

exactly team players. These teams rely instead on the legwork of in-game reports submitted by active players and on artificial intelligence. As it turns out, the anti-toxicity team doesn’t even play the game. The team, as acknowledged by Activision, merely reviews reports that have already been submitted by players.

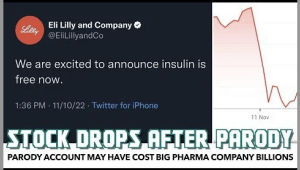

Another e-personator used their verification to impersonate former United States President
