What thinks like a human, acts like a human, and now even speaks like a human…but isn’t actually human? The answer is: Artificial Intelligence.

Yes, that’s right: the self-driving cars, talking robots, and video calls that we once saw on The Jetsons are now more or less a reality in 2022. Much of this is thanks to the development of Artificial Intelligence.

What is Artificial Intelligence?

Artificial Intelligence (AI) is an umbrella term that has many sub-definitions. Scientists have not yet agreed upon one single definition, but the term itself was coined by John McCarthy, later a professor at Stanford, all the way back in 1955. McCarthy defined artificial intelligence as “the science and engineering of making intelligent machines”. He then went on to invent the list processing language LISP, which is now used by numerous industry leaders including Boeing (the Boeing Simplified English Checker assists aerospace technical writers) and Grammarly (a grammar-checking add-on that many of us use and that, coincidentally, I am using as I write this piece). McCarthy is thought of as one of the founders of AI and is recognized for his contributions to programming languages.

Subcategories and Technologies

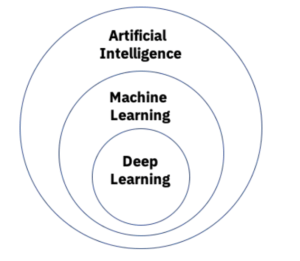

Within the overarching category of AI are smaller subcategories such as Narrow AI and General AI. Beneath the subcategories are technologies such as Machine Learning and Deep Learning, and the algorithms behind them, that help the subcategories function and meet their objectives.

Narrow AI: Also known as “weak AI”, this is task-focused intelligence. These systems focus only on specific jobs, like internet searches or autonomous driving, rather than complete human intelligence. Examples include Apple’s Siri, Amazon Alexa, and autonomous vehicles.

General AI: Also known as “strong AI”, this is the combination of AI components that would rival a human’s ability to think for themselves. Think of the robots in your favorite science-fiction novel. Science today still seems to be far from reaching General AI, which is proving much more difficult to develop than Narrow AI.

Technologies within AI Subcategories

Machine Learning requires human involvement to learn. Humans create the hierarchies and pathways for both data inputs and outputs, deciding which features of the data the machine should pay attention to. These pathways allow the machine to learn with human intervention, but this requires more structured data for the computer.
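To make the idea of human-built pathways concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the spam example, the two hand-picked features (share of capital letters and number of exclamation marks), and the function names. The point is only that a person decides what the machine looks at, and the machine learns from labeled examples within those limits.

```python
def extract_features(message: str) -> tuple[float, float]:
    """Human-designed features: share of capital letters and count of '!'."""
    letters = [c for c in message if c.isalpha()]
    caps_ratio = sum(c.isupper() for c in letters) / max(len(letters), 1)
    return (caps_ratio, float(message.count("!")))


def train_centroids(examples):
    """Average the feature values of the training messages for each label."""
    sums, counts = {}, {}
    for message, label in examples:
        caps, bangs = extract_features(message)
        total = sums.setdefault(label, [0.0, 0.0])
        total[0] += caps
        total[1] += bangs
        counts[label] = counts.get(label, 0) + 1
    return {
        label: (total[0] / counts[label], total[1] / counts[label])
        for label, total in sums.items()
    }


def predict(centroids, message: str) -> str:
    """Pick the label whose average features are closest to this message."""
    caps, bangs = extract_features(message)
    return min(
        centroids,
        key=lambda label: (caps - centroids[label][0]) ** 2
        + (bangs - centroids[label][1]) ** 2,
    )


# Hypothetical, hand-labeled training data (structured by a human).
TRAINING = [
    ("WIN A FREE PRIZE NOW!!!", "spam"),
    ("CLICK HERE!!! LIMITED OFFER!", "spam"),
    ("Are we still meeting for lunch tomorrow?", "not spam"),
    ("Here are the notes from class.", "not spam"),
]

centroids = train_centroids(TRAINING)
print(predict(centroids, "FREE MONEY!!! ACT NOW!"))
print(predict(centroids, "See you at the library later."))
```

If spammers stopped shouting in capital letters, a human would have to come back and design new features, which is the kind of intervention described above.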

Deep Learning allows the machine to make the pathway decisions by itself, with far less human intervention. Between the simple input and output layers are multiple hidden layers; together, these layers are referred to as a “neural network”. This network can receive unstructured raw data, such as images and text, and automatically work out how to tell them apart and how they should be processed.
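The layered structure described above can be sketched in a few lines of Python. This is an illustration only: the weights below are arbitrary, untrained numbers chosen for the example, and the layer sizes and function names are assumptions. A real network would learn its weights from large amounts of data.

```python
import math


def relu(x: float) -> float:
    """Common hidden-layer activation: negative values become zero."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of every input, plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Arbitrary, untrained weights (assumptions for illustration only).
HIDDEN_1_WEIGHTS = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
HIDDEN_1_BIASES = [0.0, 0.1]
HIDDEN_2_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]
HIDDEN_2_BIASES = [0.0, 0.0]
OUTPUT_WEIGHTS = [[0.7, -0.3]]
OUTPUT_BIASES = [0.0]


def forward(raw_input):
    """Pass raw numbers through two hidden layers to a single output score."""
    hidden_1 = dense(raw_input, HIDDEN_1_WEIGHTS, HIDDEN_1_BIASES, relu)
    hidden_2 = dense(hidden_1, HIDDEN_2_WEIGHTS, HIDDEN_2_BIASES, relu)
    return dense(hidden_2, OUTPUT_WEIGHTS, OUTPUT_BIASES, sigmoid)[0]


# Three raw values, for instance the brightness of three pixels.
score = forward([0.2, 0.9, 0.4])
print(score)
```

Notice that nobody tells the hidden layers what to look for. During training, the network adjusts its own weights, which is what lets it work with raw, unstructured input.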

Both Machine and Deep Learning have allowed businesses, healthcare, and other industries to flourish from the increased efficiency and time saved through minimizing human decisions. Because this technology is so new and largely unregulated, we may be seeing just how fast innovation can grow when it is uninhibited. Regulators have been hesitant to wade into the murky waters of this new and unknown technology sector.

Regulations

Currently, there is no U.S. federal law regulating the use of AI. States seem to be in a trial-and-error phase, attempting to pass a range of laws. Many of these laws attempt to deploy AI-specific task forces to monitor and evaluate AI use in that state, or to prohibit the use of algorithms in a way that unfairly discriminates based on ethnicity, race, sex, disability, or religion. A live list of pending, failed, and enacted AI legislation in each state can be found on the National Conference of State Legislatures’ website.

But what goes up must come down. While AI increases efficiency and convenience, it also poses a variety of ethical concerns, making it a double-edged sword. We explore the ups and downs of AI below and pose ethical questions that might make you stop and think twice about letting robots control our world.

Employment

With AI emerging in the workforce, many are finding that administrative and mundane tasks can now be automated. Smart contract systems, for example, use Optical Character Recognition (OCR) to scan documents and recognize text in an uploaded image. The AI can then pull out standard clauses or noncompliant language and flag them for human review. Ultimately, however, this still requires human intervention.

One growing concern with AI and employment is the possibility that AI may take over certain jobs completely. Self-driving vehicles and truck drivers are one example. If autonomous vehicles become mainstream for the large-scale transportation of goods, what will happen to the people who once held those jobs? Does the argument that there may be “fewer accidents” outweigh the unemployment that accompanies this switch? And what if the AI fails? Could there be more accidents?
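As a rough sketch of the flagging step, the Python below assumes the OCR stage has already turned the scanned contract into plain text. The list of flagged phrases, the sample contract, and the function name are all hypothetical; a real contract system would rely on far richer rules or a trained model.

```python
import re

# Hypothetical phrases a legal team might want a human to review.
FLAGGED_PATTERNS = {
    "unlimited liability": re.compile(r"\bunlimited liability\b", re.IGNORECASE),
    "automatic renewal": re.compile(
        r"\bautomatic(ally)? renew(al|s)?\b", re.IGNORECASE
    ),
}


def flag_clauses(ocr_text: str):
    """Return (line number, label, line) for every line that needs review."""
    flags = []
    for line_number, line in enumerate(ocr_text.splitlines(), start=1):
        for label, pattern in FLAGGED_PATTERNS.items():
            if pattern.search(line):
                flags.append((line_number, label, line.strip()))
    return flags


# Stand-in for text that an OCR step produced from a scanned contract.
SAMPLE_CONTRACT = """1. The term of this agreement is twelve months.
2. This agreement will automatically renew unless cancelled.
3. The supplier accepts unlimited liability for all losses."""

for line_number, label, line in flag_clauses(SAMPLE_CONTRACT):
    print(f"Line {line_number} flagged for review ({label}): {line}")
```

The program only points at the suspicious lines. Deciding what to do about them is still left to a person.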

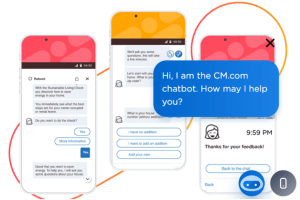

Chatbots

Chatbots are computer programs designed to simulate human communication. We most often see them in online customer service settings. The AI allows customers to hold a conversation with the Chatbot, ask questions about a specific product, and receive instant feedback. This cuts down on waiting times and improves service levels for the company.
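The simplest possible version of a customer service Chatbot can be sketched in Python as a keyword lookup. This is a deliberately basic illustration: the keywords, the canned answers, and the function name are made up, and modern Chatbots typically use language models rather than fixed rules like these.

```python
# Hypothetical canned answers, keyed by a word the customer might use.
RESPONSES = {
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "return": "You can return any item within 30 days of delivery.",
    "hours": "Our support team is available from 9 a.m. to 5 p.m. on weekdays.",
}

FALLBACK = "I'm not sure about that. Let me connect you with a human agent."


def reply(message: str) -> str:
    """Answer with the first canned response whose keyword appears in the message."""
    text = message.lower()
    for keyword, answer in RESPONSES.items():
        if keyword in text:
            return answer
    return FALLBACK


print(reply("How long does SHIPPING take?"))
print(reply("Tell me a joke"))
```

Even this toy version shows why Chatbots cut waiting times: the answer comes back instantly, and a human is only needed when the bot runs out of rules.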

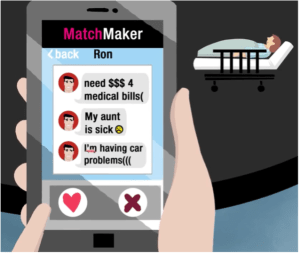

While customer service Chatbots may not spark any concern for the average consumer, the fact that these bots can engage in conversation that is almost indistinguishable from that of an actual human may pose a threat in other settings. Forget about catfishing: now individuals will have to worry about whether the “person” on the other side of their chatroom is even a person at all, or a bot that someone has designed to elicit emotional responses from victims and eventually scam them out of their money.

Privacy

AI now gives consumers the ability to unlock their devices with facial recognition. It can also recognize people’s faces in photos and tag them on social media sites. Aside from our faces, AI follows our behaviors and slowly learns our likes and dislikes, building a profile on us. Recently, the Netflix documentary “The Social Dilemma” discussed the controversy surrounding AI and social media use. In the film, we see the algorithm as three small men “inside the phone” who begin to build a profile on one of the main characters, sending notifications during periods of inactivity from the apps most likely to generate a response.

With AI, there seems to be a very fine line between the information we share and the information that stays private. We must be diligently aware of what we are opting into (or out of) to protect our personally identifiable information. While this may not be a major concern for those in the United States, it may raise concerns for people living under dictatorships that may use facial recognition as a tool to retain ultimate control.

Spread of Disinformation and Bias

AI is only as smart as the data it learns from. If it is fed data with a discriminatory bias, or any bias at all (be it political, musical, or even a preference for a certain movie genre), it will begin to make decisions based on that information.

We see the good in this (new movie suggestions in your favorite genre, an ad for a sweater that you didn’t know you needed), but we have also seen the spread of false information across social media sites. Oftentimes, algorithms will only show us news from sources that align with our political affiliation because those are the sources we tend to follow and engage with. This leaves us with a one-sided view of the world and widens the gap between parties even further.
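A toy Python example shows how this happens. The data below is invented for illustration: groups “A” and “B” are hypothetical, and the “model” simply learns how often each group was approved in past decisions. Real systems are more complex, but the same pattern applies whenever historical decisions are used as training data.

```python
from collections import defaultdict


def train(history):
    """Learn the approval rate seen for each group in past decisions."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in history:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def predict(model, group: str) -> bool:
    """Approve whenever the group was approved at least half the time before."""
    return model[group] >= 0.5


# Invented past decisions: group A was approved 8 times out of 10,
# group B only 2 times out of 10.
HISTORY = (
    [("A", True)] * 8
    + [("A", False)] * 2
    + [("B", True)] * 2
    + [("B", False)] * 8
)

model = train(HISTORY)
print(model)
print(predict(model, "A"), predict(model, "B"))
```

The model never sees anything about an individual applicant. It repeats the pattern in its training data, so a biased history produces biased decisions.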

As AI develops, we will be faced with new ethical questions every day. How do we prevent bias when it is almost human nature to begin with? How do we protect individuals’ privacy while still letting them enjoy the convenience of AI technology?

Can we have our cake and eat it too? Stay tuned in the next few years to find out…

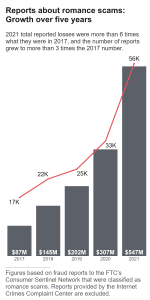
