Introduction

In recent years, social media has taken hold of nearly every aspect of human interaction and turned the way we communicate on its head. Social media apps’ ability to disseminate information almost instantaneously has affected the way many sectors of business operate. Whether the sector is entertainment, social, environmental, educational, or financial, social media has bewildered in-house legal departments across all industries. Additionally, the generational gap between the person actually posting for the account and their supervisor has only exacerbated the potential for communications to miss their mark and cause controversy or adverse effects.

These days, most companies have social media accounts, but not all accounts are created equal, and they certainly are not all monitored the same way. In most cases, these accounts are not regulated at all except by their own internal managers and #CancelCulture. Depending on the product or company, social media managers have done their best to stay abreast of changes in popular hashtags, trends, and challenges, and of the overall shift from a corporate tone of voice to a more relatable one (more Gen-Z-esque, if you will). But with this shift, the rights and implications of corporate speech through social media have been put to the test.

Changes in Corporate Speech on Social Media

In the last 20 years, corporate use of social media has become a battle for relevance. With the decline of print media, social media and its apps have emerged as a marketing necessity. Early social media use was predominantly geared towards social purposes. If we look at the origins of Facebook, Myspace, and Twitter, it is clear that these apps were intended for superficial uses rather than corporate communications. This changed with the rise of LinkedIn, which sparked a shift towards business and professional use of social media.

Today, social media is used to report on almost every aspect of our lives. From disaster preparation and emergency response to political updates, dating and relationship finders, and customer service tasks, social media truly covers it all. It is also more common nowadays to face backlash for staying silent on social media after a major social or political movement occurs. Social media is also increasingly being used for research with geolocation technology, for organizing demonstrations and political unrest, and, in the business context, for developing sales, marketing, networking, and hiring or recruiting practices.

These changes are starting to prompt significant conversations in the business world about company speech, regulated disclosures, and First Amendment rights. For example, there is so far minimal research on how financial firms disseminate communications to investor news outlets via social media and in what format recipients respond to them. And while some may view social media as an opportunity to further this kind of investor outreach, others have expressed concerns that disseminating communications in this manner could cause a company to lose control over those communications entirely.

The viral nature of social media makes it easier not just for investors to connect with companies, but also for a company’s posts to reach individuals who do not directly follow that company and who are therefore much less likely to be informed about its prior financial communications and the importance of any changes. This creates risk for a company’s investor communications via social media: a post can spread to uninformed individuals, which could in turn produce adverse consequences for the company when it comes to concerns about reliance and misleading information.

Corporate Use, Regulations, and Topics of Interest on Social Media

With the rise of social media coverage of various societal issues, these apps have become a platform for news coverage, political movements, and social concerns and, for some generations, a platform that replaces traditional news media almost entirely. Specifically, when it comes to the growing interest in ESG-related matters and sustainable business practices, social media serves as a powerful tool for communicating information. For example, the Spanish company Acciona was recently identified by the latest Epsilon Icarus Analytics Panel on ESG Sustainability as having the highest-resonating ESG content in Spain across its social networks. Acciona demonstrates the potential for a company to lead and shape digital communications on ESG-related topics. This developing content strategy focuses on brand values, and specifically, for Acciona, on strong climate-change-based values, female leadership, diversity, and other cultural and societal changes, all of which demonstrate this new age of social media as a business marketing necessity.

Consequently, this shift in the use of social media and in the way we treat corporate speech on these platforms has left room for emerging regulation. Commercial or corporate speech is generally permissible under constitutional free speech rights, so long as the corporation is not making false or misleading statements. Section 230 of the Communications Decency Act provides broad protection to online platforms from liability for information that third parties post on them. In most contexts, social media platforms will not be held accountable for the consequences of that content (e.g., a bad user’s speech). For example, a recent lawsuit against TikTok and its parent company, brought after a young girl died while participating in a trending challenge that went awry, was dismissed because the platform was immune from liability under § 230.

In essence, when it comes to ESG-related topics, the way a company handles its social media and the actual posts it puts out can greatly affect the company’s success and reputation, because ESG-focused perspectives often affect many aspects of how the business operates. The type of communication, and the coverage of various issues, can affect a company’s performance in both the short and long term, and can in turn drive change in corporate environmental practices, governance, labor and employment standards, human resource management, and more.

With ESG trending, investors, shareholders, and regulators now face serious risk management concerns. As ESG concerns continue to rise, companies must address news concerning their social responsibilities more publicly and much more frequently. The SEC mandates that public companies report on their activities, through Consumer Service Reports, in annual 10-K filings and disclosures, along with ESG disclosures under a recently promulgated rule. These disclosures are designed to hold companies accountable and to improve their environmental, social, and economic performance in line with their stakeholders’ expectations.

Conclusion

In conclusion, social media platforms have created an entirely new channel for corporate speech, and with it new legal exposure. Companies should proceed cautiously when covering social, political, environmental, and related concerns, and should weigh both their methods of disseminating information and the possible effects their posts may have on business performance and reputation overall.

Last month, I wrote a

Last month, I wrote a

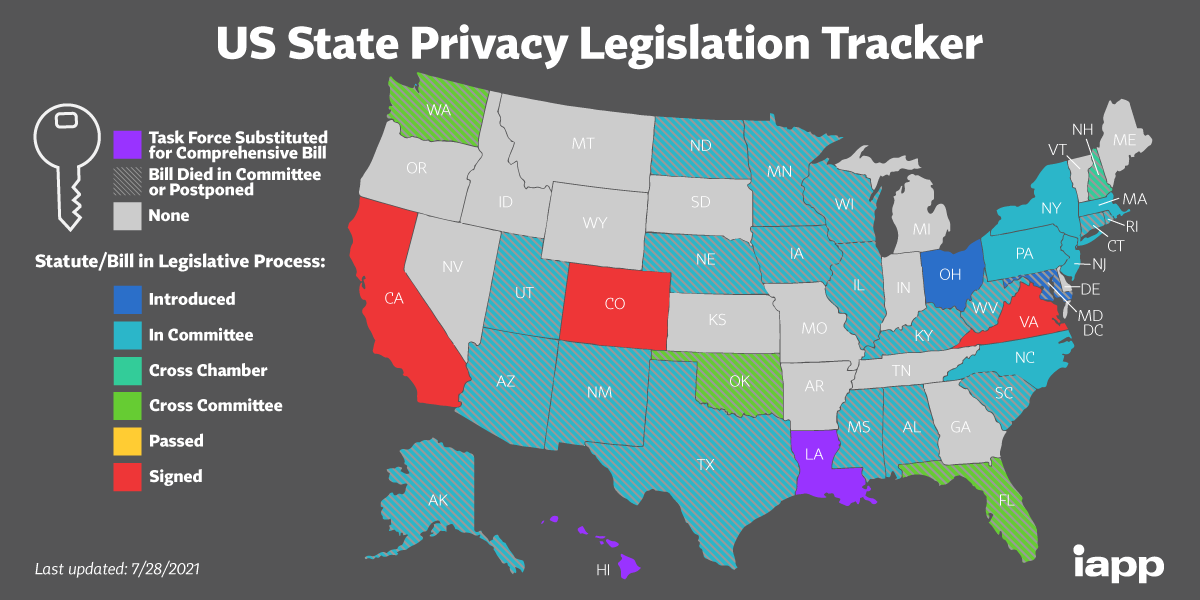

So far only three states, California, Colorado and Virginia have actually enacted comprehensive consumer data privacy laws according to the

So far only three states, California, Colorado and Virginia have actually enacted comprehensive consumer data privacy laws according to the