The #hashtag is an important marketing tool that has revolutionized how companies conduct business. Essentially, a hashtag is a pound sign (#) typed in front of a word or phrase (e.g., #OOTD or #Kony2012) to identify a keyword or topic of interest and make it searchable. Placing a hashtag at the beginning of a word or phrase on Twitter, Instagram, Facebook, TikTok, etc., turns that word or phrase into a hyperlink that connects it to other related posts, thus driving traffic to users’ sites. This is a great way to promote a product, service, or campaign while simultaneously reducing marketing costs and increasing brand loyalty, customer engagement, and, of course, sales. But with the rise of this digital “sharing” tool comes a new wave of intellectual property challenges. Over the years, there has been increasing interest in including hashtags in trademark applications.

#ToRegisterOrNotToRegister

According to the United States Patent and Trademark Office (USPTO), a term containing the hash symbol or the word “hashtag” MAY be registered as a trademark. The USPTO recognizes hashtags as registrable trademarks “only if [the mark] functions as an identifier of the source of the applicant’s goods or services.” Section 1202.18 of the Trademark Manual of Examining Procedure (TMEP) further explains that “when examining a proposed mark containing the hash symbol, careful consideration should be given to the overall context of the mark, the placement of the hash symbol in the mark, the identified goods and services, and the specimen of use, if available. If the hash symbol immediately precedes numbers in a mark, or is used merely as the pound or number symbol in a mark, such marks should not necessarily be construed as hashtag marks. This determination should be made on a case-by-case basis.”

As with other forms of trademarks, one would seek registration of a hashtag in order to exclude others from using the mark when selling or offering the goods or services listed in the registration. More importantly, the trademark would help protect against consumer confusion. The same standard applies to any other word, phrase, or symbol for which trademark registration is sought. The threshold question when considering whether to file a trademark application for a hashtag is whether the hashtag identifies the source of goods or services, or whether it merely describes a particular topic, movement, or idea.

#BarsToRegistration

Merely affixing a hashtag to a mark does not automatically make it registrable. For example, in 2019, the Trademark Trial and Appeal Board (TTAB) denied registration of #MAGICNUMBER108 for shirts because the mark did not function as a trademark and therefore was not a source identifier. Rather, the TTAB found that the social media evidence suggested the public sees the hashtag as a “widely used message to convey information about the Chicago Cubs baseball team,” namely, their 2016 World Series win after a 108-year drought. The TTAB went on to say that just because the mark is unique does not mean the public would perceive it as an indication of source. This further demonstrates the importance of an association between the mark and the source of the goods.

Hashtags that would not function as trademarks are those that simply relate to certain topics without being associated with any particular goods or services. Take cooking, for example: #dinnersfortwo, #mealprep, or #healthylunches. Users would likely search these hashtags to find information about cooking or recipe ideas, and when encountering them on social media, would probably not link them to a specific brand or product. By contrast, hashtags like #TheSaladLab or #ChefCuso would likely be linked to specific social media influencers who use those marks in connection with their goods and services, and as such could function as trademarks. Other hashtags that would likely function as trademarks are brand names themselves (#sephora, #prada, or #nike). Even slogans for popular brands would suffice (#justdoit, #americarunsondunkin, or #snapcracklepop).

#Infringement

What makes trademarked hashtags different from other forms of trademarked material is that hashtags serve a purpose beyond identifying the source of goods: they index keywords on social media so that users can follow topics they are interested in. So, does using a trademarked hashtag in your social media post create a cause of action for trademark infringement? The answer is every lawyer’s favorite response: it depends. Sticking with the example above, and assuming #TheSaladLab is a registered trademark, referencing the tag in this blog post alone would likely not warrant a trademark infringement claim, but if I were to sell kitchen tools or recipe books with the tag #TheSaladLab, that might rise to the level of infringement. However, courts have not yet settled how enforceable hashtagged marks are. In 2013, a Mississippi District Court stated in an order that “hashtagging a competitor’s name or product in social media posts could, in certain circumstances, deceive consumers.” The court never actually ruled on whether the use of the hashtag infringed the registered mark.

This is problematic because, on one hand, regardless of whether a hashtag precedes the mark, the owner of a registered trademark is entitled to sue for trademark infringement when someone else uses the mark in commerce, without permission, in the same industry. On the other hand, someone who places the “#” symbol in front of a trademark to share information on social media is simply following the norms of the internet. The goal is to strike a balance between protecting the rights of IP owners and protecting users’ freedom of expression on social media.

While the courts are still catching up on infringement involving hashtagged trademarks, various social media platforms (Instagram, Facebook, Twitter, YouTube) currently have procedures that allow users to report misuse of trademark-protected material or other intellectual property concerns.

Last month, I wrote a

Last month, I wrote a

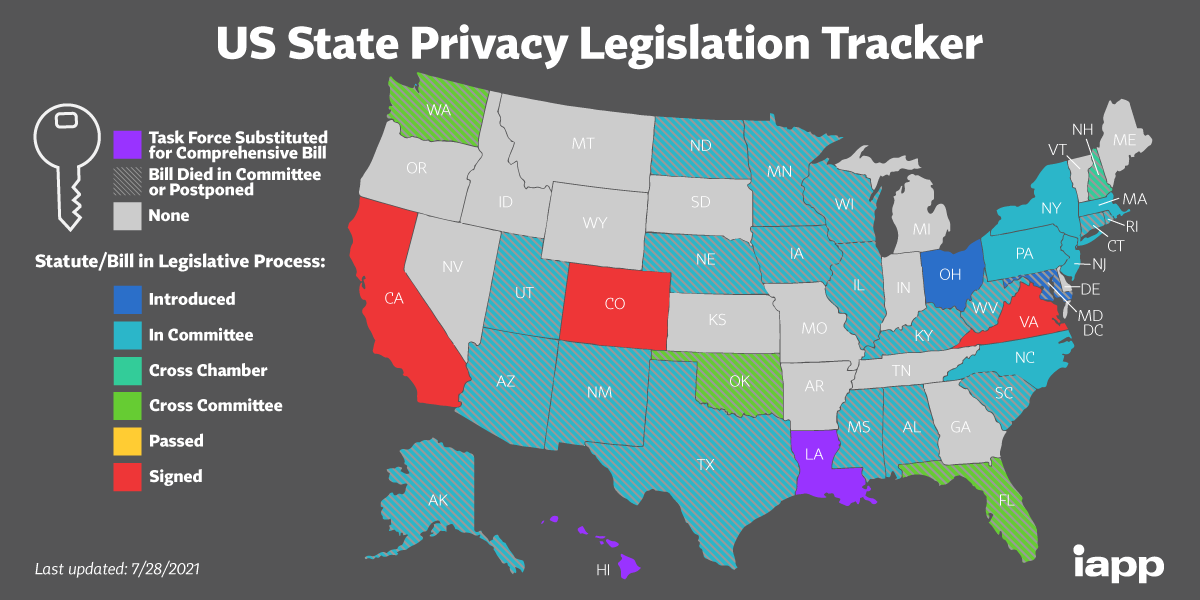

So far only three states, California, Colorado and Virginia have actually enacted comprehensive consumer data privacy laws according to the

So far only three states, California, Colorado and Virginia have actually enacted comprehensive consumer data privacy laws according to the