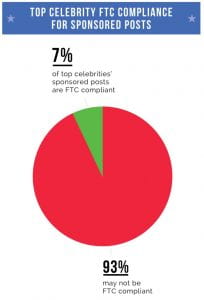

Have you ever heard of deepfakes? The term “deepfake” combines “deep learning” and “fake.” Deep learning is a family of machine-learning algorithms that learn patterns from data and can make decisions on their own. By applying deep learning, deepfake technology replaces the face in an original image or video with another person’s likeness.

What does deep learning have to do with switching faces?

Basically, deepfake software learns automatically from the images and videos it is trained on. The more data it is given, the more realistic its content becomes.

Deepfake enables anyone to create “fake” media.

How do deepfakes work?

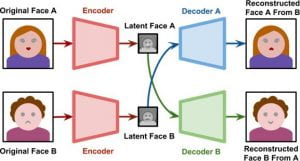

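First, an AI algorithm called an encoder is trained on a large number of face shots of two people. The encoder finds the similarities between the two faces and compresses each image into a compact representation of those shared features, such as pose and expression. Two further AI algorithms called decoders, one per person, learn to recover that person’s face from the compressed data. To perform a face swap, the compressed version of person A’s face is fed into the decoder trained on person B. That decoder then reconstructs B’s face with A’s expression and orientation.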
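The wiring behind the swap can be sketched in a few lines of Python. This is a toy, hypothetical illustration. The names `Face`, `Latent`, `shared_encoder`, and `Decoder` are invented here. Hand-written functions stand in for the neural networks, which in a real system would be trained on many images. The sketch shows only the data flow: one shared encoder, one decoder per person, and a swap that decodes person A’s compressed face with person B’s decoder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Face:
    identity: str     # whose face it is
    pose: str         # e.g. "turned left"
    expression: str   # e.g. "smiling"


@dataclass(frozen=True)
class Latent:
    # The compressed code keeps only features both people's faces share.
    pose: str
    expression: str


def shared_encoder(face: Face) -> Latent:
    # Stand-in for the trained encoder: identity is discarded,
    # pose and expression survive the compression.
    return Latent(face.pose, face.expression)


class Decoder:
    # Stand-in for a decoder trained only on one person's faces:
    # whatever code it receives, it renders that person's face.
    def __init__(self, identity: str) -> None:
        self.identity = identity

    def decode(self, code: Latent) -> Face:
        return Face(self.identity, code.pose, code.expression)


decoder_a = Decoder("Person A")
decoder_b = Decoder("Person B")

# Training-time behaviour: each decoder reconstructs its own person.
frame = Face("Person A", pose="turned left", expression="smiling")
assert decoder_a.decode(shared_encoder(frame)) == frame

# The swap: encode A's frame, then decode it with B's decoder.
swapped = decoder_b.decode(shared_encoder(frame))
print(swapped)  # Face(identity='Person B', pose='turned left', expression='smiling')
```

In a real system, nothing forces the encoder to drop identity by hand. That behaviour emerges because the one encoder must serve both decoders.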

Another way to create deepfakes is with a GAN, or generative adversarial network. In the first method, the encoder and decoder work hand in hand. A GAN instead pits two AI algorithms against each other.

The first algorithm, the generator, is given random noise and converts it into an image. This synthetic image is then mixed into a stream of real images, such as photos of celebrities. The combined stream is fed to the second algorithm, the discriminator, which tries to tell the real images from the fakes. Each side learns from the result: the discriminator gets better at spotting fakes, and the generator gets better at fooling it. After this process is repeated countless times, the generator creates completely lifelike faces.
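The adversarial loop can be sketched with a deliberately tiny, one-dimensional GAN in plain Python. Several simplifications are assumed, all invented for illustration:

- The “real data” are numbers drawn from a normal distribution centred at 4, not images.
- The generator turns noise z into the fake sample b + z and has a single learnable parameter, b.
- The discriminator is a logistic regression, D(x) = sigmoid(w·x + c).

Each training step first updates the discriminator to separate real from fake samples. It then updates the generator so that its samples look more “real” to the discriminator. The function name `train_toy_gan` and all hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 4.0, steps: int = 5000, batch: int = 32,
                  lr: float = 0.05, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    b = 0.0          # generator: turns noise z into the fake sample b + z
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c) = P(x is real)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [b + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient descent on -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            p = sigmoid(w * x + c)
            gw -= (1.0 - p) * x
            gc -= 1.0 - p
        for x in fake:
            p = sigmoid(w * x + c)
            gw += p * x
            gc += p
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: gradient descent on -log D(fake), which moves b
        # toward the region the discriminator currently labels "real".
        gb = 0.0
        for _ in range(batch):
            x = b + rng.gauss(0.0, 1.0)
            gb -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * gb / batch

    return b, w, c


b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real data centred at 4.0)")
```

With these settings, we expect `b` to settle near 4. At that point the fakes sit on top of the real data and the discriminator can no longer tell them apart. Image GANs follow the same alternating recipe, with deep networks in place of these one-parameter models.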

For instance, artist Bill Posters used deepfake technology to create a fake video in which Mark Zuckerberg appears to say that Facebook’s mission is to manipulate its users.

Real enough?

How about this? Imagine Paris Hilton’s famous quote, “If you don’t even know what to say, just be like, ‘That’s hot,’” delivered by Vladimir Putin, President of Russia. Anyone who knows neither of them might believe that Putin is a Playboy editor-in-chief.

Yes, we can all laugh at these fake jokes. But when something becomes this popular, it comes at a price.

Deepfake was originally developed by an online user of the same name, for the purpose of entertainment, as the user put it.

And yes, that entertainment meant pornography.

The biggest problem with deepfakes is that they are hard to tell apart from the original footage. The technology has become far more sophisticated than simply superimposing one face onto another.

Researchers found that more than 95% of deepfake videos were pornographic, and 99% of those videos had faces replaced with those of female celebrities. Experts warn that such videos weaponize artificial intelligence against women, perpetuating a cycle of humiliation, harassment, and abuse.

How do you spot the difference?

As mentioned earlier, the algorithms are fast learners, so with every breath we take, deepfake media becomes more realistic. Luckily, research showed that deepfake faces do not blink normally, and sometimes do not blink at all. That sounds like an easy tell to remember. Well, let’s not get ahead of ourselves just yet. In machine learning, a flaw tends to be corrected soon after it is revealed, for example by training the algorithm on more images of people with their eyes closed. That is how algorithms learn. So, unfortunately, the famous blink issue has already been solved.
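One commonly used way to measure blinking in video is a heuristic called the eye aspect ratio (EAR). It compares an eye’s height to its width using six landmark points around the eye, and it drops sharply when the eye closes. The sketch below makes several assumptions:

- The six points have already been extracted for each frame by a face-landmark detector, which is not shown.
- The coordinates are made up for illustration.
- The `threshold` and `min_frames` values are illustrative guesses, not tuned settings.

A long clip in which the EAR never dips is the kind of red flag early detection research looked for. Newer deepfakes, however, blink.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2 and p3 lie on the upper lid,
    directly above p6 and p5 on the lower lid.
    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|)
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ears: Sequence[float], threshold: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = 0
    run = 0
    for ear in ears:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (2, 1.5), (4, 1.5), (6, 0), (4, -1.5), (2, -1.5)]
closed_eye = [(0, 0), (2, 0.3), (4, 0.3), (6, 0), (4, -0.3), (2, -0.3)]

frames = ([open_eye] * 10 + [closed_eye] * 3 + [open_eye] * 10
          + [closed_eye] * 2 + [open_eye] * 5)
ears = [eye_aspect_ratio(f) for f in frames]
print(count_blinks(ears))  # 2
```

Requiring several consecutive low frames filters out single-frame landmark glitches, at the cost of missing very fast blinks.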

But not so fast. We humans may not learn as quickly as machines, but we can be attentive and creative, qualities that tin cans cannot possess, at least for now.

Detecting a deepfake often takes only a little extra attention. Ask these questions to see through the trick:

Does the skin look airbrushed?

Does the voice synchronize with the mouth movements?

Is the lighting natural, and does the lighting on the person’s face make sense for the scene?

For example, the background may be dark while the person’s shiny glasses reflect bright rays of sunlight.

Often, deepfake content is labeled as such because creators want to present themselves as artists and show off their work.

In 2018, Deeptrace developed software to detect deepfake content. Deeptrace reported that deepfake videos are proliferating online and that their rapid growth is “supported by the growing commodification of tools and services that lower the barrier for non-experts—from well-maintained open source libraries to cheap deepfakes-as-a-service websites.”

The pros and cons of deepfake

Some of the cons may be self-explanatory, but here are other risks that deepfakes pose:

- Destabilization: the misuse of deepfake can destabilize politics and international relations by falsely implicating political figures in scandals.

- Cybersecurity: the technology can also threaten cybersecurity, for example by making fake political figures appear to incite aggression.

- Fraud: audio deepfakes can clone voices, convincing people that they are talking to someone real and tricking them into giving away private information.

Well then, are there any pros to deepfake technology other than entertainment value? Surprisingly, a few:

- Accessibility: deepfake technology can create various synthetic voices that turn text into speech, which can help people with speech impairments.

- Education: deepfakes can deliver innovative lessons that are more engaging and interactive than traditional ones. For example, deepfake technology can bring famous historical figures back to life to explain what happened during their time. When used responsibly, it can serve as a better learning tool.

- Creativity: instead of hiring a professional narrator, creators can use audio deepfakes to tell a captivating story at a fraction of the cost.

If people use deepfake technology while holding themselves to high ethical and moral standards, it can create opportunities for everyone.

Case

In a recent custody dispute in the UK, the mother presented an audio file to prove that the father should not be allowed to take their child. In the recording, the father could be heard making a series of violent threats toward his wife.

The audio file seemed like compelling evidence. Just when it looked as though the mother would walk out with a smile on her face, the father’s attorney sensed that something was not right. The attorney challenged the evidence, and forensic analysis revealed that the audio had been doctored using deepfake technology.

The case is still pending. But do you see the larger problem it exposes? We live in an era where the tools for tampering with evidence are available to anyone with an Internet connection. Courts will need to scrutinize evidence more closely to determine whether it has been altered.

Current legislation on deepfakes

At the federal level, the National Defense Authorization Act for Fiscal Year 2021 (“NDAA”), which became law when Congress voted to override then-President Trump’s veto, requires the Department of Homeland Security (“DHS”) to issue an annual report on manipulated media and deepfakes for the next five years.

So far, only three states have taken action against deepfake technology.

On September 1, 2019, Texas became the first state to prohibit the creation and distribution of deepfake content intended to harm candidates for public office or influence elections.

Similarly, California bans the creation of “videos, images, or audio of politicians doctored to resemble real footage within 60 days of an election.”

Also in 2019, Virginia banned deepfake pornography.

What else does the law say?

Deepfakes are not illegal per se. But depending on the content, a deepfake can breach data protection law, infringe copyright, or be defamatory. Additionally, sharing non-consensual content or committing a revenge porn crime is punishable under state law. For example, in New York City, a revenge porn crime carries penalties of up to one year in jail and a fine of up to $1,000 in criminal court.

Henry Ajder, head of threat intelligence at Deeptrace, raised another issue: “plausible deniability.” Because deepfakes exist, anyone can wrongfully dismiss real events as fake or cover them up with fabricated ones.

What about First Amendment rights?

The First Amendment of the U.S. Constitution states:

“Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.”

Injunctions against deepfakes are almost certain to face First Amendment challenges, and those challenges will be the biggest hurdle to overcome. Even if a lawsuit survives them, the lack of jurisdiction over publishers outside the court’s territory would limit the effectiveness of any injunction. Moreover, courts rarely grant injunctions except in particular circumstances, such as obscenity or copyright infringement.

How does defamation law apply to deepfakes?

Defamation is a statement that injures a third party’s reputation. To prove defamation, a plaintiff must show all four of the following elements:

1) a false statement purporting to be fact;

2) publication or communication of that statement to a third person;

3) fault amounting to at least negligence; and

4) damages, or some harm caused to the person or entity who is the subject of the statement.

As you can see, defamation claims over deepfakes are unlikely to succeed, because it is difficult to prove that the content purported to be a statement of fact. To protect themselves, defendants may only need to include the word “fake” somewhere in the content. Even more simply, they can point out that they used deep“fake” technology to publish it.

Pursuing a defamation claim against nonconsensual deepfake pornography poses a further problem. The heart of such a claim is the lack of consent, yet current defamation law does not address whether the victim consented to the publication.

To reflect the transformative impact of artificial intelligence, I would suggest enacting new legislation to regulate AI-driven technology such as deepfakes. This could lower the hurdles that plaintiffs face.

What are your suggestions regarding deepfakes? Share your thoughts!