From claims that the moon landing was faked to Area 51, the United States loves its conspiracy theories. In fact, a study sponsored by the University of Chicago found that more than half of Americans believe at least one conspiracy theory. While this is not a new phenomenon, the increasing use of and reliance on social media have allowed misinformation and harmful ideas to spread with a level of ease that wasn’t possible even twenty years ago.

Individuals with a large platform can express an opinion that harms the people who are personally implicated in the ‘information’ being spread. Presently, a plaintiff’s best option to challenge harmful speech is a claim for defamation. The inherent problem is that opinions are protected by the First Amendment and, thus, not actionable as defamation.

This leaves injured plaintiffs limited in their available remedies because statements made on the internet are more likely to be seen as opinion. The internet has created a gap in which there are injured plaintiffs and no available remedy. With this brave new world of communication, interaction, and the spread of information by anyone with a platform comes a need to ensure that injuries caused by this speech will have legal recourse.

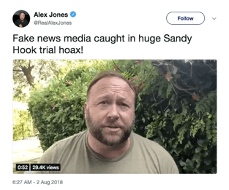

Recently, Alex Jones lost a defamation suit and was ordered to pay $965 million to the families of the Sandy Hook victims after claiming that the 2012 Sandy Hook shooting was a “hoax.” Although the plaintiffs prevailed at trial, the statements that were the subject of the suit do not fit neatly into the well-established law of defamation, which makes reversal on appeal likely.

The elements of defamation require that the defendant publish a false statement, purporting it to be true, that results in some harm to the plaintiff. However, the fact that a statement is false does not mean that the plaintiff can prove defamation because, as the Supreme Court has recognized, false statements still receive certain First Amendment protections. In Milkovich v. Lorain Journal Co., the Court held that “imaginative expression” and “loose, figurative, or hyperbolic language” are protected by the First Amendment.

The characterization of something as a “hoax” has been held by courts to fall into this category of protected speech. In Montgomery v. Risen, a software developer brought a defamation action against an author who claimed that the plaintiff’s software was a “hoax.” The D.C. Circuit held that characterizing something as an “elaborate and dangerous hoax” is hyperbolic speech, which creates no basis for liability. This holding was mirrored by several courts, including the District Court of Kansas in Yeager v. National Public Radio, the District Court of Utah in Nunes v. Rushton, and the Superior Court of Delaware in Owens v. Lead Stories, LLC.

The other statements made by Alex Jones regarding Sandy Hook are also hyperbolic language. These statements include: “[i]t’s as phony as a $3 bill,” “I watched the footage, it looks like a drill,” and “my gut is… this is staged. And you know I’ve been saying the last few months, get ready for big mass shootings, and then magically, it happens.” While these statements are offensive and cruel to the suffering families, it is difficult to characterize them as assertions of objective fact. ‘Phony’, ‘my gut is’, ‘looks like’, and ‘magically’ qualify each statement as a subjective opinion based on his interpretation of the events that took place.

It is indisputable that the statements Alex Jones made caused harm to these families. They have been subjected to harassment, online abuse, and death threats from his followers. However, no matter how harmful these statements are, harm alone does not make them defamation. Despite this, a reasonable jury was so appalled by this conduct that it found for the plaintiffs. This is essentially reverse jury nullification: the jury decided that Jones was culpable and should be held legally responsible even if there is no adequate basis for liability.

The jury’s determination demonstrates that current legal remedies are inadequate to regulate potentially harmful speech that can spread like wildfire on the internet. The influence that a person like Alex Jones has over his followers establishes a need for new or updated laws that hold public figures to a higher standard even when they are expressing their opinion.

A possible starting point for regulating harmful internet speech at the federal level might be the Commerce Clause, which allows Congress to regulate instrumentalities of commerce. The internet, by its design, is an instrumentality of interstate commerce because it enables the communication of ideas across state lines.

Further, the Federal Anti-Riot Act, which was passed in 1968 to suppress civil rights protestors, might be an existing law that can serve this purpose. This law makes it a felony to use a facility of interstate commerce (1) to incite a riot; or (2) to organize, promote, encourage, participate in, or carry on a riot. The act defines a riot as:

[A] public disturbance involving (1) an act or acts of violence by one or more persons part of an assemblage of three or more persons, which act or acts shall constitute a clear and present danger of, or shall result in, damage or injury to the property of any other person or to the person of any other individual or (2) a threat or threats of the commission of an act or acts of violence by one or more persons part of an assemblage of three or more persons having, individually or collectively, the ability of immediate execution of such threat or threats, where the performance of the threatened act or acts of violence would constitute a clear and present danger of, or would result in, damage or injury to the property of any other person or to the person of any other individual.

Under this definition, we might have a basis for holding Alex Jones accountable for organizing, promoting, or encouraging a riot through a facility (the internet) of interstate commerce. The acts of his followers in harassing the families of the Sandy Hook victims might constitute a public disturbance within this definition because they “result[ed] in, damage or injury… to the person.” While this demonstrates one potential avenue for regulating harmful internet speech, new laws might also need to be drafted to meet the evolving function of social media.

In the era of the internet, public figures have an unprecedented ability to spread misinformation and incite lawlessness. This is true even if their statements would typically constitute opinion, because the internet makes it easier for groups that can act on these ideas to form. Thus, in this internet age, it is crucial that we develop a means to regulate the spread of misinformation that has the potential to harm individual people and the general public.