Advances in technology have forever changed education. More than ever, schools utilize online and interactive forums to enhance the learning process.

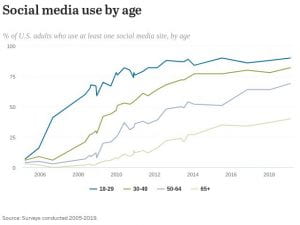

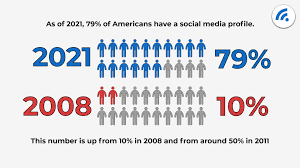

School social media pages are often used to communicate upcoming events and other news to large numbers of people who have an interest in the organization, while groups are designed to create communities of people. Students, parents, and schools use social media to convey messages for widespread dissemination. The superintendent may want to give updates on the newest COVID restrictions, teachers may communicate about the upcoming class trip, and parents may find a pedagogical group setting. And, of course, Pace Law students generously share that updated syllabus before the night’s class.

In today’s world, being literate means more than just being able to read and write. We also need to know how to effectively navigate and find information online, operate a computer, use the digital medium for learning, manage online identities, communicate online, understand online social norms, and collaborate. Benefits of using social media for education include creating a sense of community, promoting collaboration, enhancing communication, developing computer literacy and language skills, and incorporating student culture into the learning environment, all while allowing for connections with people abroad.

In turn, technology companies obtain a significant amount of personal information about the user. This creates concerns in the educational realm about student safety and privacy, which can, at times, fall under the Family Educational Rights and Privacy Act (FERPA), a law created to protect students’ personally identifiable information (PII) from unauthorized disclosure. Other rules and acts that attempt to protect educational records include the Children’s Online Privacy Protection Act of 1998 (COPPA) and the Protection of Pupil Rights Amendment (PPRA). FERPA does not require educational institutions to adopt specific security controls, which poses a risk to student privacy. To complicate the matter of what student information should be protected, the school official exception of FERPA allows school districts to disclose PII to a third-party provider serving a legitimate educational interest. As a result, FERPA may not be equipped to deal with modern collection of student records and information, or to protect that data as schools increasingly use third-party applications to provide valuable educational tools.

Online educational services collect a large amount of transactional data, also known as “metadata.” This refers to information such as how long a particular student took to perform an online task, how many attempts the student made, and how long the student’s mouse hovered over an item, potentially indicating indecision. This can be a useful tool for educators, but at what cost to students?

The potential exists for educational platforms to allow third-party data harvesting. Through contracts with educational institutions, Google has provided a large range of products and services, including Google Meet, Duo, Google Messaging, Gmail, and the ability to share documents. A 2014 lawsuit against Google included allegations that the company collected student emails and metadata, without consent, to create secret user profiles for its advertising tools. The allegations resemble what happened when Facebook provided access to Cambridge Analytica.

In 2019, the FTC and Facebook settled on a $5 billion penalty for Facebook’s violations of previously issued FTC orders related to Facebook’s privacy practices.

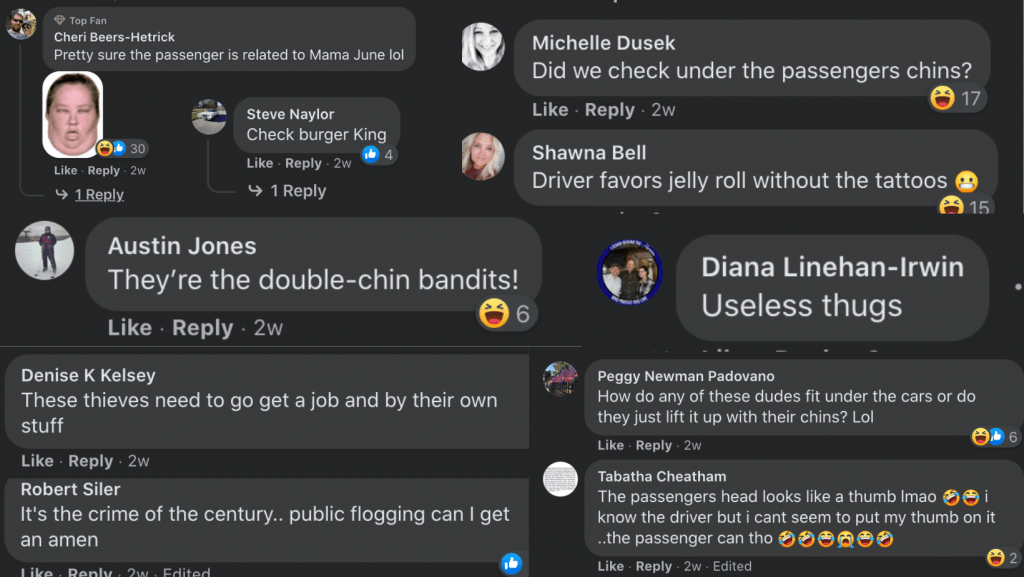

When one visits a school’s Facebook page, the amount of time spent, and the “comments” and “likes” left, can become part of the data profile that Facebook has created for that visitor. A school district page or group gives Facebook “another data point” on the users who visit it, said Doug Levin, the founder and president of EdTech Strategies, LLC. Some districts also have Facebook trackers on their district sites. Levin’s recent research report, “Tracking: EDU—Education Agency Website Security and Privacy Practices,” found that more than 25% of the 159 school websites he studied had embedded user tracking tools that reported information back to Facebook. These trackers can use information about users’ browsing history and other activity across the web, not just on Facebook, to target ads. Most school district websites don’t disclose in their privacy policies that the data sharing is taking place. Sound familiar?
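For readers curious how an embedded tracker might be spotted, here is a minimal sketch in Python. It is not the method used in Levin’s report. It simply searches a page’s HTML for two addresses commonly associated with Facebook’s tracking pixel; the pattern list, the function name `find_facebook_trackers`, and the sample page are all illustrative assumptions.

```python
import re

# Addresses commonly associated with Facebook's tracking pixel.
# This short list is an illustrative assumption, not the list used in the report.
TRACKER_PATTERNS = [
    r"connect\.facebook\.net",   # host that serves the pixel script
    r"facebook\.com/tr\b",       # endpoint the pixel reports back to
]


def find_facebook_trackers(html: str) -> list[str]:
    """Return the tracker patterns that appear anywhere in the page source."""
    return [p for p in TRACKER_PATTERNS if re.search(p, html, re.IGNORECASE)]


# Hypothetical district page used only for demonstration.
sample_page = """
<html><head>
<script src="https://connect.facebook.net/en_US/fbevents.js"></script>
</head><body>District news</body></html>
"""

print(find_facebook_trackers(sample_page))
```

A real audit would need to do more, since trackers can be loaded indirectly by other scripts, but even a simple check like this gives parents and administrators a starting point for asking what a district site shares.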

Cambridge Analytica, the political consulting firm, used data gathered in a personality quiz administered through Facebook to create voter profiles. Some 270,000 users downloaded the quiz app, which then gave access to their profiles and, ultimately, to their friends’ profiles. According to Facebook, 87 million users were affected. In the wake of the scandal over the inappropriate use of that information surrounding the 2016 Presidential election, Cambridge Analytica LLC recently filed for bankruptcy in United States Bankruptcy Court.

All users and administrators should be educated on what data is collected and how that data is used. Schools need to maintain awareness of current state, federal and other pertinent laws that affect data and online educational services. Parents and students need to be aware of which online educational services are being used. Transparency needs to go both ways and students have an obligation to ask and ultimately know what data is being collected and how that data is being used.

One education research organization, the National Education Policy Center, has deleted its Facebook page entirely and is suggesting schools do the same until companies are subject to greater accountability and transparency. Is that really an option, though, given how big the services have become? Is there a happy (and safe) medium between writing on slate boards and sharing everything? Can Facebook play nice in the sandbox and follow FTC orders? Does its cost-benefit analysis favor repeated violations?

Perhaps FERPA, and other similar acts, need to be updated to cover online learning programs and to protect against data misuse of the kind seen with Facebook, Google, and Cambridge Analytica.