Police departments across the country are calling keyboard warriors into action to help them solve crimes…but at what cost?

In a survey of 539 police departments in the U.S., 76% of departments said that they used their social media accounts to solicit tips on crimes. Departments post “arrested” photos to celebrate arrests and surveillance footage to identify suspects, and some, like the Harford County Sheriff’s Office, even post themed wanted posters.

The process for using social media as an investigative tool is dangerously simple and the consequences can be brutal. A detective thinks posting on social media might help an investigation, so the department posts a video or picture asking for information. The community, armed with full names, addresses, and other personal information, responds with some tips and a lot of judgmental, threatening, and bigoted comments. Most police departments have no policy for removing posts after information has been gathered or cases are closed, even if the highlighted person is found to be innocent. A majority of people who are arrested are not even convicted of a crime.

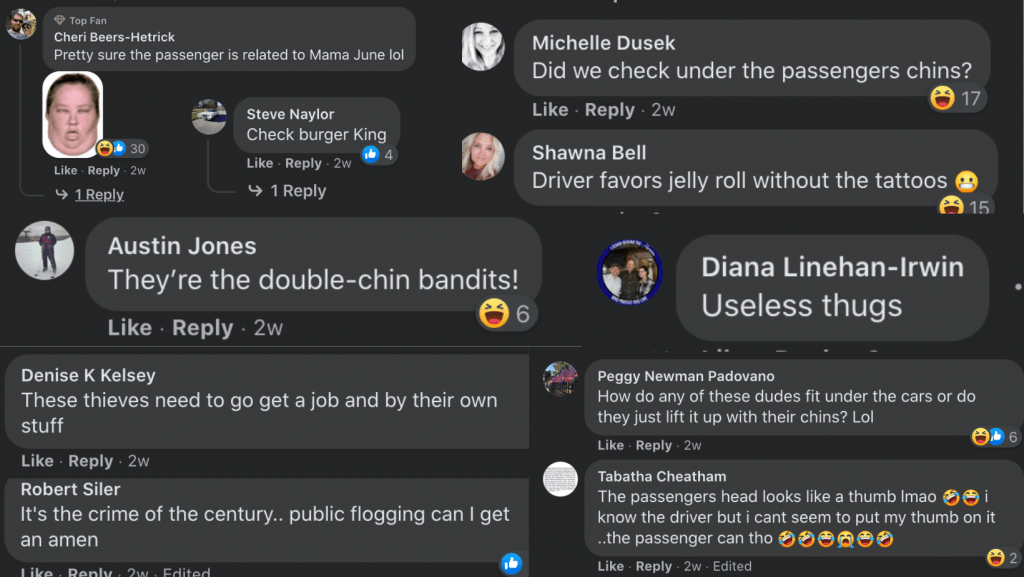

Law enforcement’s use of social media in this way threatens the presumption of innocence, creates a culture of public humiliation, and often fills comment sections with bigoted and threatening remarks.

On February 26, 2020, the Manhattan Beach Police Department posted a mugshot of Matthew Jacques on its Facebook and Instagram pages as part of its “Wanted Wednesday” social media series. The pages have 4,500 and 13,600 followers, respectively, most of them local. The post portrayed Matthew as a fugitive, and commenters responded publicly with information about where he worked. Matthew tried to call off work out of fear of a citizen’s arrest. The fear turned out to be warranted when two strangers came to find him at his workplace. Matthew eventually lost his job because he was too afraid to return to work.

You may be thinking this is not a big deal. This guy was probably wanted for something really bad and the police needed help. After all, the post said the police had a warrant. Think again.

There was no active warrant for Matthew at the time; his only warrant, which had already been resolved, came from taking too long to schedule remedial classes for a 2017 DUI. Matthew was publicly humiliated by the local police department. The department even refused to remove the social media posts after being notified of the truth. The result?

Matthew filed a complaint against the department for defamation (as well as libel per se and false light invasion of privacy). Typically, defamation requires the plaintiff to show:

1) a false statement purporting to be fact; 2) publication or communication of that statement to a third person; 3) fault amounting to at least negligence; and 4) damages, or some harm caused to the person or entity who is the subject of the statement.

Here, the department made a false statement: that there was a warrant. They published it on their social media, satisfying the second element. They did not check readily available public records that showed Matthew did not have a warrant, which shows fault amounting to at least negligence. Finally, Matthew lived in fear and lost his job. Clearly, he was harmed.

The police department claimed its posts were protected by the California Constitution, governmental immunity, and the First Amendment. Fortunately, the court denied the department’s anti-SLAPP motion. More than a year after the posts went up, the department took them down and settled the lawsuit with Matthew.

Some may think that Matthew’s case is an anomaly and that, usually, the negative attention is warranted and perhaps even socially beneficial because it further disincentivizes criminal activity via humiliation and social stigma. However, because most arrests don’t result in convictions, many of the police’s cyberbullying victims are likely innocent. Many commenters nonetheless assume the highlighted individual’s guilt and take to their keyboards to shame them. Even if the individuals are guilty, leaving these posts up can raise the barrier to societal re-entry, which can increase recidivism rates. A negative digital record can make finding jobs and housing more difficult.

Here’s one example of a post and comment section from the Toledo Police Department Facebook page:

Unless departments change their social media use policies, they will continue to face defamation lawsuits and continue to erode the presumption of innocence.

Police departments should stop using social media in the humiliating ways described above. At the very least, they should consider reserving this tactic for violent felonies. Some departments have already changed their policies.

The San Francisco Police Department has stopped posting mugshots of criminal suspects on social media. According to criminal defense attorney Mark Reichel, “The decision was made in consultation with the San Francisco Public Defender’s Office who argued that the practice of posting mugshots online had the potential to taint criminal trials and follow accused individuals long after any debt to society is paid.” For a discussion of some of the issues social media presents to maintaining a fair trial, see Social Media, Venue and the Right to a Fair Trial.

Do you think police departments should reconsider their social media policies?